The Big Data (Hadoop) market is forecast to grow at a compound annual growth rate (CAGR) of 58%, surpassing $16 billion by 2020.
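
To get a feel for what a 58% CAGR means, here is a minimal sketch of the compound growth arithmetic. The 58% rate and the $16 billion target come from the forecast above; the five-year horizon is purely an assumption for illustration, since the forecast's base year is not given here.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after `years` of compounding at rate `cagr` (0.58 means 58%)."""
    return start_value * (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Starting value that reaches `end_value` after `years` at rate `cagr`."""
    return end_value / (1.0 + cagr) ** years


if __name__ == "__main__":
    CAGR = 0.58        # from the forecast quoted above
    TARGET_BN = 16.0   # USD billions by 2020, from the forecast quoted above
    YEARS = 5          # ASSUMPTION: horizon length, not stated in the text

    start = implied_start_value(TARGET_BN, CAGR, YEARS)
    print(f"Implied starting market size: ${start:.2f}bn")
    for year in range(YEARS + 1):
        # Each year multiplies the previous value by (1 + CAGR).
        print(year, f"${project_value(start, CAGR, year):.2f}bn")
```

Changing `YEARS` shows how sensitive the implied starting size is to the horizon you assume.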

Most organizations are still stuck at the "Go / No Go" decision.

That said, the major hurdles and challenges are mainly the following:

1- Hadoop infrastructure (hardware, network, etc.) is cheap, but it is complicated to operate and maintain, especially as the cluster grows beyond 100-150 machines.

2- For a non-social-media organization (e.g. healthcare, insurance, banking or telecommunications), the use cases are often not large enough to justify shifting from an existing MPP system (Teradata, Netezza, Oracle, etc.) to a distributed system.

3- "Real" Hadoop experts are hard to find and do not come cheap, and on top of that the learning curve for Big Data technologies is very steep.

4- Data security in a "distributed file system" lacks many of the features that have long since matured in traditional RDBMS and MPP systems.

5- Corporations have already spent a large share of their budgets on purchasing, implementing and maintaining expensive DWH, data integration and data analytics solutions, and cannot simply scrap them to try something new.

6- Business users and data analysts are reluctant to shift from "SQL"-based analysis (e.g. traditional RDBMS, SAS, etc.) to a "programming"-oriented approach (e.g. Apache Hive, Pig, Impala, Python, etc.). Because of the steep learning curve, it is very hard either to replace the whole workforce with new Hadoop experts or to train existing staff on the new technologies.

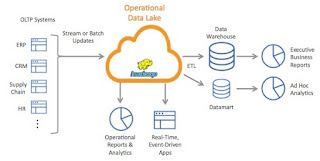

Building up the Big Data infrastructure in chunks, taking baby steps to put your test cases under a production workload, and evaluating the ROI is the key to overcoming these challenges. In short: "start simple", later "expand", and finally, once the ROI is proven, "shift".

Amazon Web Services offers a considerably cheap, quick and easy "service-oriented" architecture that takes care of the above challenges and lets you focus on your data analytics and data integration. It takes on the responsibility for software and hardware setup, maintenance, storage and scaling, and all of that can be achieved in days, not months. The following is a sample illustration:

Big Data Architecture on AWS

Amazon S3: an object-level storage service. All of your log files, delimited data files, etc. can be stored here, used for data processing and integration, and later archived to a cheap storage vault, "Amazon Glacier".
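
As a rough sketch of the "store in S3, archive to Glacier later" idea, the snippet below builds an S3 lifecycle configuration that transitions objects to the Glacier storage class after a number of days. The `logs/` prefix, the 90-day threshold and the bucket name are hypothetical values chosen for illustration; the function names are mine, not part of any AWS API.

```python
from typing import Any, Dict


def build_archive_rule(prefix: str, days_before_archive: int) -> Dict[str, Any]:
    """Build an S3 lifecycle configuration that moves objects to Glacier."""
    if days_before_archive < 0:
        raise ValueError("days_before_archive must be non-negative")
    return {
        "Rules": [
            {
                # Rule ID derived from the prefix; "all" if the prefix is empty.
                "ID": f"archive-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": days_before_archive, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }


def apply_archive_rule(bucket: str, config: Dict[str, Any]) -> None:
    """Apply the configuration with boto3 (requires AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


if __name__ == "__main__":
    # ASSUMPTION: hypothetical prefix and retention period.
    config = build_archive_rule("logs/", 90)
    print(config)
    # apply_archive_rule("my-example-bucket", config)  # hypothetical bucket
```

Keeping the rule builder separate from the AWS call means the configuration can be reviewed and tested before it touches a real bucket.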

Amazon DynamoDB: a very strong NoSQL database with a SQL-like interface, which narrows the skills gap for complex/big data analysis.
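
One SQL-like way to query DynamoDB today is PartiQL, exposed in boto3 through `execute_statement`. Whether that is the interface meant above is an assumption on my part. The sketch below builds a parameterized lookup; the table name `Orders` and the key `customer_id` are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def build_lookup(
    table: str, key_name: str, key_value: str
) -> Tuple[str, List[Dict[str, Any]]]:
    """Build a PartiQL SELECT with one string-typed equality parameter."""
    # Table and attribute names are assumed to come from trusted code,
    # not from user input; only the value is passed as a parameter.
    statement = f'SELECT * FROM "{table}" WHERE "{key_name}" = ?'
    parameters = [{"S": key_value}]  # low-level DynamoDB string attribute
    return statement, parameters


def run_lookup(statement: str, parameters: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Run the statement with boto3 (requires AWS credentials and a table)."""
    import boto3  # imported lazily so build_lookup works without boto3

    client = boto3.client("dynamodb")
    response = client.execute_statement(Statement=statement, Parameters=parameters)
    return response["Items"]


if __name__ == "__main__":
    # ASSUMPTION: hypothetical table and key names.
    stmt, params = build_lookup("Orders", "customer_id", "C-1001")
    print(stmt)
    print(params)
```

For an analyst used to SQL, the statement reads much like a regular `SELECT`, which is the point of the skills-gap argument.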

Amazon Redshift: a huge, scalable, petabyte-scale structured storage solution that serves fast data analysis and ad hoc reporting, backed by a distributed MPP engine (distributed, like Hadoop or any other MPP system).
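
To make the S3-to-Redshift flow a bit more concrete, here is a minimal sketch that builds a Redshift `COPY` statement for loading delimited files from S3, plus an ordinary SQL query for ad hoc reporting. The table name `web_logs`, the column `event_date`, the bucket path and the IAM role ARN are all hypothetical placeholders, and the helper function is mine rather than part of any library.

```python
def build_copy_statement(
    table: str, s3_uri: str, iam_role_arn: str, delimiter: str = "|"
) -> str:
    """Build a Redshift COPY statement for delimited files stored in S3."""
    if not s3_uri.startswith("s3://"):
        raise ValueError("s3_uri must start with 's3://'")
    if len(delimiter) != 1 or delimiter == "'":
        raise ValueError("delimiter must be a single non-quote character")
    # Inputs are assumed to come from trusted configuration, not user input.
    return (
        f"COPY {table}\n"
        f"FROM '{s3_uri}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"DELIMITER '{delimiter}';"
    )


# Plain SQL is enough for ad hoc reporting once the data is loaded.
AD_HOC_QUERY = (
    "SELECT event_date, COUNT(*) AS events\n"
    "FROM web_logs\n"
    "GROUP BY event_date\n"
    "ORDER BY event_date;"
)

if __name__ == "__main__":
    # ASSUMPTION: hypothetical table, bucket and role.
    print(
        build_copy_statement(
            "web_logs",
            "s3://my-example-bucket/logs/",
            "arn:aws:iam::123456789012:role/ExampleRedshiftRole",
        )
    )
    print(AD_HOC_QUERY)
```

Both statements can be run from any SQL client connected to the cluster, so existing SQL skills carry over directly.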

And guess what? All of these components are highly secure and highly available through AWS VPN, and they auto-scale, so no matter how small or large the workload becomes, the architecture remains the same and scales up instantly and automatically with minimal effort.

So, we suggest that corporations start with a minimal investment, put their use cases to the test, evaluate the ROI and, based on the results, shift gradually to Big Data environments.

I would love to hear your feedback, and please share this post if you like it.

Thanks, Tahir Aziz