One of the major challenges in fast-paced reporting and analytics is producing the required reports and dashboards from near-real-time data so that decisions can be made immediately.

Our current use case is an organization (a healthcare provider) that wants visibility into its healthcare facilities through current or near-real-time data (no more than 30 minutes old): statistics on patients, departments, hospital equipment usage, current equipment status, and so on.

The challenge: a limited budget and very limited time. In most cases the development team ends up writing an "operational reporting query" directly against the OLTP system, and a couple of such queries or dashboards are enough to affect the live production environment. This works for a short period, but in the long run it has a huge impact on production.

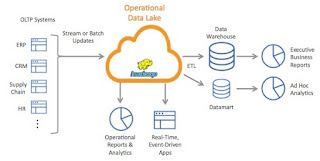

The solution we advise is to shift the "heavy processing work" to a cheap and strong candidate: "Big Data". The revised workflow could look as follows.

The main idea is to offload the production workload to an inexpensive Hadoop data lake environment, which stores the data and refreshes it from the source every 30 minutes. The ad hoc and operational reporting queries (SQL) can usually be translated to Hive (HQL) with minimal changes, which saves a lot of effort and cost on production maintenance. Above all, even with a limited budget you can still meet critical project deadlines without impacting any OLTP system.
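As a rough sketch of the 30-minute refresh, the snippet below assembles an incremental import command and checks whether the lake copy is still inside the freshness window. Apache Sqoop is an assumption here (the post does not name an ingestion tool), and the JDBC URL, table, column, and path names are hypothetical placeholders.

```python
from datetime import datetime, timedelta

# Freshness target taken from the use case: data no more than 30 minutes old.
REFRESH_INTERVAL = timedelta(minutes=30)


def build_incremental_import(jdbc_url, table, check_column,
                             last_value, target_dir, merge_key):
    """Build a Sqoop 1 command (as an argument list) that pulls only the
    rows modified since the previous refresh. Sqoop is an assumed tool."""
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--table", table,
        "--target-dir", target_dir,
        "--incremental", "lastmodified",  # only new or updated rows
        "--check-column", check_column,   # timestamp column to compare
        "--last-value", last_value,       # high-water mark of the last run
        "--merge-key", merge_key,         # key used to merge updated rows
    ]


def is_stale(last_refresh, now):
    """True when the lake copy is older than the 30-minute target."""
    return now - last_refresh > REFRESH_INTERVAL


# Hypothetical values, for illustration only.
command = build_incremental_import(
    jdbc_url="jdbc:oracle:thin:@//oltp-host:1521/HOSPITAL",
    table="EQUIPMENT_STATUS",
    check_column="LAST_UPDATED",
    last_value="2024-01-01 10:00:00",
    target_dir="/datalake/raw/equipment_status",
    merge_key="EQUIPMENT_ID",
)
print(" ".join(command))
```

A scheduler (cron, Oozie, or similar) would run such a command every 30 minutes and record the new high-water mark, so each run reads only a small slice of the OLTP tables instead of rescanning them.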

Future perspectives for this approach:

- We can use the data lake for data reconciliation between different OLTP sources, e.g. claims or GL transactions.

- We can integrate data from multiple heterogeneous sources, e.g. business-maintained files on SharePoint combined with operational reports.

- It can serve as a staging area for the data warehouse environment, offloading the production access window, so that near-real-time data can be pushed to the DWH.

- It helps meet the SLAs for dashboard delivery to business stakeholders, for both operational dashboards and DWH-based ad hoc/analytical dashboards.

- It can also take over some of the complex, time-consuming ETL transformations from the DWH or data mart: Hadoop can apply those transformations while pushing data to the EDW or data mart and send an "enriched" version of the data set.
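The reconciliation idea from the first point can be illustrated with a minimal sketch. It assumes each side has already been aggregated into per-key totals (for example, claim amounts per day, in cents); the function name and the sample figures are hypothetical, not taken from a real system.

```python
def reconcile(source_totals, lake_totals, tolerance=0):
    """Compare per-key totals from an OLTP source with the data lake copy.

    Returns {key: (source_value, lake_value)} for every key that is
    missing on one side or differs by more than `tolerance`.
    """
    mismatches = {}
    for key in sorted(set(source_totals) | set(lake_totals)):
        src = source_totals.get(key)
        lake = lake_totals.get(key)
        if src is None or lake is None or abs(src - lake) > tolerance:
            mismatches[key] = (src, lake)
    return mismatches


# Hypothetical daily claim totals in cents.
oltp_claims = {"2024-01-01": 120050, "2024-01-02": 98000, "2024-01-03": 45000}
lake_claims = {"2024-01-01": 120050, "2024-01-02": 97500}

print(reconcile(oltp_claims, lake_claims))
```

In practice the two dictionaries would come from an aggregate query on each side; running the comparison in the lake keeps the extra load off the OLTP system.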

With the advent of big data analytics, more and more companies are making use of the large amounts of data that they produce to better understand their customers and run their businesses more effectively. When used correctly, big data analysis can help businesses create industry-leading products, services, and business models, generate new revenue streams, reduce costs, and discover new ways to make money.
